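Calling the Android Camera from Cocos2dx

Preface: my project needed to access the Android camera from Cocos2dx, but Cocos2dx itself has no API for this, hence this post. It is my first proper blog post, so corrections are very welcome.

There are two common IDEs for Android development, Eclipse and Android Studio. Accessing the Android camera works much the same in both; this post uses Android Studio.

There are many ways to access the Android camera. I have mainly used two:

- the OpenCV Native Camera Library

- the Vuforia SDK

Both approaches rely on third-party libraries; you can also use the Android Camera API directly. First, the pros and cons of each approach.

Approach 1: the OpenCV approach does not support Android versions newer than 5.0; in other words, it only works on pre-5.0 releases. It is, however, very simple and needs very little code.

Approach 2: the Vuforia approach supports far more versions, including the latest Android releases at the time of writing, but it requires much more invasive changes and easily leads to multithreading problems, so take great care.

Here are the steps I followed.

Using the Vuforia SDK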

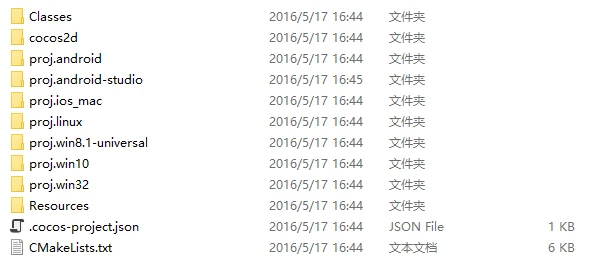

Create a new Cocos2dx project named cpp-vuforia-camera. Its directory layout is:

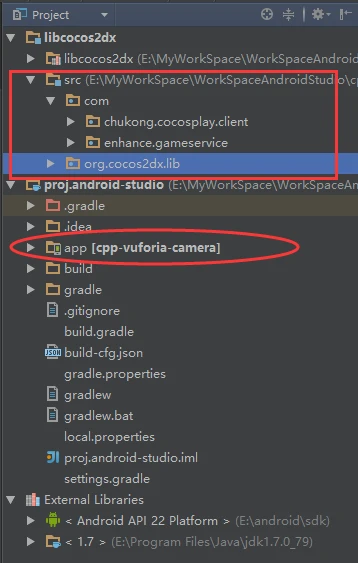

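After importing the AS project from the project directory into Android Studio, the layout is:

Pay particular attention to the parts framed in red in the figure above.

First add Vuforia.jar to the project so that the Vuforia API can be called from the Java layer.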

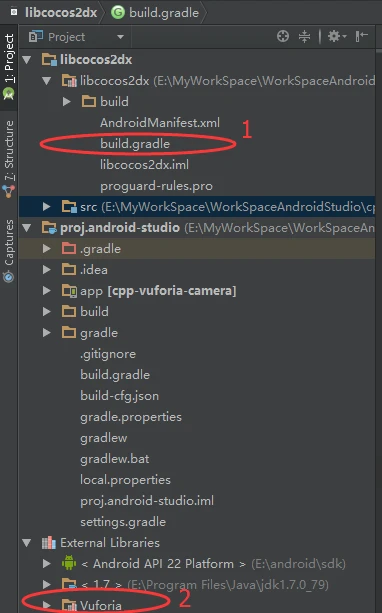

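In the build.gradle file marked by red box 1 in the figure above, add the three Vuforia global definitions below, adjusting the paths to match your own Vuforia installation: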

def VUFORIA_SDK_ROOT = "E:\\Libraries\\vuforia-sdk-android-5-5-9"

def VUFORIA_NATIVE_LIB = "build\\lib"

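def VUFORIA_JAR = "build/java/vuforia"

Then add the following line inside dependencies:

compile files("$VUFORIA_SDK_ROOT/$VUFORIA_JAR/Vuforia.jar")

Finally, click Sync Now to sync Gradle; Vuforia.jar then appears as shown in red box 2 in the figure above.

In the AndroidManifest.xml that sits in the same directory as that build.gradle, add the camera permissions: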

<!--

The application requires a camera.

NOTE: Any application that requests the CAMERA permission but does not

declare any camera features with the <uses-feature> element will be

assumed to use all camera features (auto-focus and flash). Thus, the

application will not be compatible with devices that do not support

all camera features. Please use <uses-feature> to declare only the

camera features that your application does need. For instance, if you

request the CAMERA permission, but you do not need auto-focus or

flash, then declare only the android.hardware.camera feature. The

other camera features that you do not request will no longer be

assumed as required.

-->

<uses-feature android:name="android.hardware.camera"/>

<!--Add this permission to get access to the camera.-->

<uses-permission android:name="android.permission.CAMERA" />

<!--Add this permission to allow opening network sockets.-->

<uses-permission android:name="android.permission.INTERNET"/>

<!-- Add this permission to check which network access properties (e.g.

active type: 3G/WiFi). -->

<uses-permission android:name="android.permission.ACCESS_NETWORK_STATE" />

<uses-permission android:name="android.permission.READ_EXTERNAL_STORAGE"/>

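<uses-feature android:name="android.hardware.camera.front" android:required="false" />

Next, add the Vuforia camera-access code. The official Vuforia sample source provides a camera-access mechanism that we can use directly. It still needs a Vuforia license key for initialization, which you can get by registering a Vuforia account.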

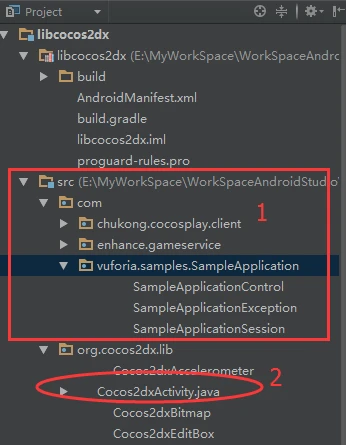

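After adding the Vuforia sample interfaces, the directory looks like red box 1 in the figure above.

In SampleApplicationControl.java, comment out every method except onInitARDone and onVuforiaUpdate.

SampleApplicationSession.java calls this interface, so the calls to the methods you just commented out must be commented out there as well. Also comment out the import of the R class: getInitializationErrorString reads some string resources from R, so change it to return your own strings instead. There are a few other changes that I will not detail here; it is enough to comment out everything related to trackers. Pay the most attention to SampleApplicationSession: because I handle the update callback in the native layer, its onPostExecute method invokes a native callback. The three modified files can be cloned from my GitHub repository.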

Three functions are handed over to the native layer:

- private native void startNativeCamera(); // start the camera

- private native void stopNativeCamera(); // release the camera

- private native void onVuforiaInitializedNative(); // register the callback
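Each of these Java declarations has to be matched by a C/C++ function whose name follows the JNI naming rules, which is why the native function names later in this post are so long. As a rough sketch only, the helper below (jniSymbolName is a hypothetical name of mine, not part of JNI, Cocos2dx or Vuforia) builds the expected symbol for a non-overloaded method. It handles only ASCII letters, digits, dots, slashes and underscores.

```cpp
#include <cassert>
#include <string>

// Hypothetical helper: builds the C symbol name the JVM looks up for a
// non-overloaded native method. Rule used: "Java_" + mangled class name +
// "_" + mangled method name, where '.' or '/' becomes '_' and '_' becomes "_1".
std::string jniSymbolName(const std::string& qualifiedClass, const std::string& method) {
    auto mangle = [](const std::string& s) -> std::string {
        std::string out;
        for (char c : s) {
            if (c == '.' || c == '/') {
                out += '_';      // package separator
            } else if (c == '_') {
                out += "_1";     // a literal underscore is escaped
            } else {
                out += c;
            }
        }
        return out;
    };
    return "Java_" + mangle(qualifiedClass) + "_" + mangle(method);
}
```

For example, passing the class org.cocos2dx.lib.Cocos2dxActivity and the method initApplicationNative yields the name of the native function that has to exist on the C++ side. If the name does not match, the method cannot be resolved at run time.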

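Leave the native layer aside for now and deal with the Java layer first. In the Java layer, the Activity's actual logic lives in Cocos2dxActivity.java (red box 2 in the figure above), not in AppActivity.java under the org.cocos2dx.cpp package.

Make Cocos2dxActivity implement Vuforia's SampleApplicationControl interface and implement its onInitARDone and onVuforiaUpdate methods: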

private SampleApplicationSession vuforiaAppSession;

/** Native function to initialize the application. */

private native void initApplicationNative();

@Override

public void onInitARDone(SampleApplicationException exception)

{

initApplicationNative();

if (exception == null)

{

try

{

vuforiaAppSession.startAR(CameraDevice.CAMERA_DIRECTION.CAMERA_DIRECTION_FRONT);

// Log.e("myErr","startAR");

} catch (SampleApplicationException e)

{

Log.e("myErr", e.getString());

}

boolean result = CameraDevice.getInstance().setFocusMode(

CameraDevice.FOCUS_MODE.FOCUS_MODE_CONTINUOUSAUTO);

if (!result)

Log.e("myErr", "Unable to enable continuous autofocus");

} else

{

Log.e("myErr", exception.getString());

}

}

@Override

public void onVuforiaUpdate(State state)

{

Log.e("myErr", "update!");

}

With these two methods implemented, Cocos2dxActivity and Vuforia still run completely independently of each other. Next, initialize Vuforia in the onCreate callback:

//vuforia

vuforiaAppSession = new SampleApplicationSession(this);

// vuforiaAppSession

// .initAR(this, ActivityInfo.SCREEN_ORIENTATION_PORTRAIT);

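vuforiaAppSession.initAR(this, ActivityInfo.SCREEN_ORIENTATION_LANDSCAPE);

At this point the Java-layer changes are essentially done. If you run the app now, it crashes immediately with no error in the log, because the native methods used above are not implemented yet. That brings us to the native layer.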

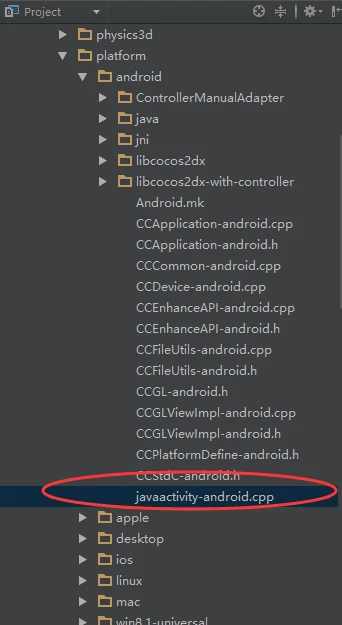

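The JNI glue code is at the location marked by the red box in the figure above. The actual directory is ${Project_Root}/cocos2d/cocos/platform/android/, where Project_Root is your project directory (cpp-vuforia-camera in this example). Write the implementations of your native methods in that directory: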

class Vuforia_updateCallback : public Vuforia::UpdateCallback

{

virtual void Vuforia_onUpdate(Vuforia::State& state)

{

LOGE("Vuforia_onUpdateNative!");

Vuforia::Image *imageRGB888 = NULL;

Vuforia::Image* imageGRAY = NULL;

Vuforia::Frame frame = state.getFrame();

for (int i = 0; i < frame.getNumImages(); ++i) {

const Vuforia::Image *image = frame.getImage(i);

if (image->getFormat() == Vuforia::RGB888) {

imageRGB888 = (Vuforia::Image*)image;

break;

}

else if(image->getFormat() == Vuforia::GRAYSCALE){

imageGRAY = (Vuforia::Image*)image;

break;

}

}

if (imageRGB888) {

JNIEnv* env = 0;

if ((JniHelper::getJavaVM() != 0) && (activityObj != 0)

&&(JniHelper::getJavaVM()->GetEnv((void**)&env, JNI_VERSION_1_4) == JNI_OK)

) {

// JNIEnv* env = JniHelper::getEnv();

const short* pixels = (const short*) imageRGB888->getPixels();

int width = imageRGB888->getWidth();

int height = imageRGB888->getHeight();

int numPixels = width * height;

jbyteArray pixelArray = env->NewByteArray(numPixels * 3);

env->SetByteArrayRegion(pixelArray, 0, numPixels * 3, (const jbyte*) pixels);

cv::Mat temp3Channel = cv::Mat(imageRGB888->getHeight(), imageRGB888->getWidth(), CV_8UC3, (unsigned char *)imageRGB888->getPixels());

pthread_mutex_lock(&mutex);

channel3Mat = temp3Channel.clone();

pthread_mutex_unlock(&mutex);

// imageBuffer = (char*)env->NewGlobalRef(pixelArray);

jclass javaClass = env->GetObjectClass(activityObj);

env->DeleteLocalRef(pixelArray);

// if (channel2Mat.data == NULL)

// LOGE("channel2Mat is NULL");

// else

// LOGE("channel2Mat get it!");

// JniHelper::getJavaVM()->DetachCurrentThread();

}

}

else if (imageGRAY) {

JNIEnv* env = 0;

// JniHelper::getJavaVM()->AttachCurrentThread((void**)&env, NULL);

if ((JniHelper::getJavaVM() != 0) && (activityObj != 0)

&&(JniHelper::getJavaVM()->GetEnv((void**)&env, JNI_VERSION_1_4) == JNI_OK)

) {

// JNIEnv* env = JniHelper::getEnv();

const short* pixels = (const short*) imageGRAY->getPixels();

int width = imageGRAY->getWidth();

int height = imageGRAY->getHeight();

int numPixels = width * height;

jbyteArray pixelArray = env->NewByteArray(numPixels * 1);

env->SetByteArrayRegion(pixelArray, 0, numPixels * 1, (const jbyte*) pixels);

// Wrap the Vuforia buffer using the image's real dimensions instead of a hardcoded 640x480.

cv::Mat tempGray = cv::Mat(imageGRAY->getHeight(), imageGRAY->getWidth(), CV_8UC1, (unsigned char *)imageGRAY->getPixels());

pthread_mutex_lock(&mutex);

// Deep copy, as in the RGB888 branch, so that grayMat does not alias the Vuforia frame buffer.

grayMat = tempGray.clone();

// imageBuffer = (char *)env->GetByteArrayElements(pixelArray, 0);

pthread_mutex_unlock(&mutex);

jclass javaClass = env->GetObjectClass(activityObj);

env->DeleteLocalRef(pixelArray);

}

}

}

};

Vuforia_updateCallback updateCallback;

JNIEXPORT void JNICALL Java_com_vuforia_samples_SampleApplication_SampleApplicationSession_onVuforiaInitializedNative(JNIEnv * env, jobject)

{

LOGE("onVuforiaInitalizeNative!");

// Register the update callback where we handle the data set swap:

Vuforia::registerCallback(&updateCallback);

}

JNIEXPORT void JNICALL Java_org_cocos2dx_lib_Cocos2dxActivity_initApplicationNative(JNIEnv* env, jobject obj)

{

activityObj = env->NewGlobalRef(obj);

}

JNIEXPORT void JNICALL Java_com_vuforia_samples_SampleApplication_SampleApplicationSession_startNativeCamera(JNIEnv *, jobject, jint camera)

{

LOGE("SampleApplicationSession_startNativeCamera");

// Start the camera:

if (!Vuforia::CameraDevice::getInstance().start())

return;

Vuforia::setFrameFormat(Vuforia::RGB888, true);

}

JNIEXPORT void JNICALL Java_com_vuforia_samples_SampleApplication_SampleApplicationSession_stopNativeCamera(JNIEnv *, jobject)

{

LOGE("SampleApplicationSession_stopNativeCamera");

Vuforia::CameraDevice::getInstance().stop();

Vuforia::CameraDevice::getInstance().deinit();

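}

This code uses the Vuforia and OpenCV headers, so their include directories must be added to the Android.mk in the current directory, since that .mk file is the makefile that compiles javaactivity-android.cpp: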

LOCAL_C_INCLUDES += E:/Libraries/vuforia-sdk-android-5-5-9/build/include

LOCAL_C_INCLUDES += E:/Libraries/opencv2410/build/include

LOCAL_EXPORT_INCLUDES += E:/Libraries/opencv2410/build/include添加完毕后,会出现 undefined reference to ‘Vuforia::CameraDevice::getInst

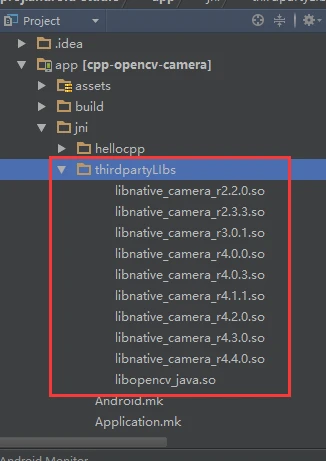

ance()’的error,这是为啥了?因为 我们还没使用vuforia的.so文件啊,Android平台上,Vuforia 的方法实现都在 这里面装着了,接下来,我们要做的是,将官方Vuforia的.so库和libopencv_java.so放置在我们的libs目录下

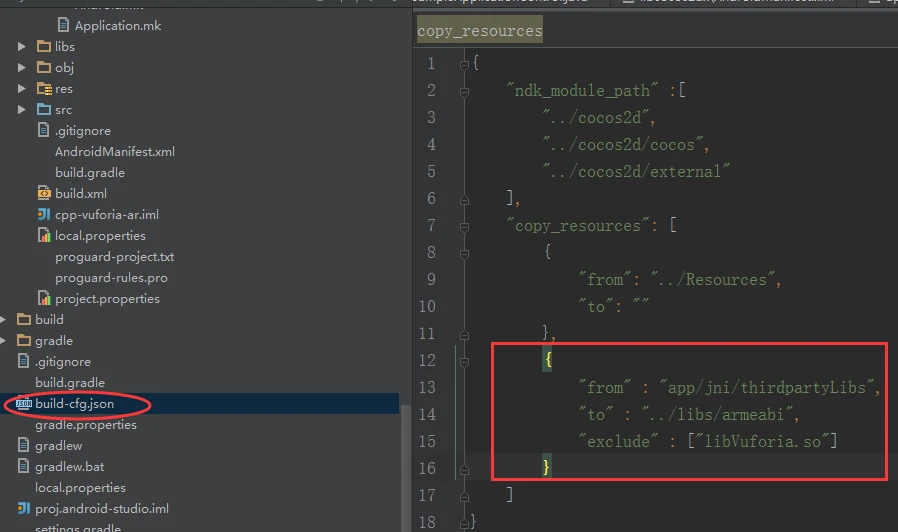

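There are many ways to do this. For libopencv_java.so I use the build-cfg.json file that ships with Cocos2dx to copy the .so into a given directory, as shown in the figure below. Why do it this way? I explain that later, when comparing it with how libVuforia.so is handled.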

Here:

- the from key is relative to ${Project_Root}/proj.android-studio/

- the to key is relative to ${Project_Root}/proj.android-studio/app/assets/

- the exclude key filters out certain files
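To see where a given from/to pair ends up, it can help to resolve the relative paths by hand. The sketch below is only an illustration of that arithmetic; resolvePath is a hypothetical helper of mine, not something Cocos2dx provides, and it assumes forward-slash paths and purely lexical handling of "." and "..".

```cpp
#include <cassert>
#include <sstream>
#include <string>
#include <vector>

// Hypothetical helper: joins `relative` onto `base` and collapses "." and ".."
// segments lexically (no file system access).
std::string resolvePath(const std::string& base, const std::string& relative) {
    std::vector<std::string> parts;
    std::stringstream stream(base + "/" + relative);
    std::string segment;
    while (std::getline(stream, segment, '/')) {
        if (segment.empty() || segment == ".") {
            continue;                    // skip empty and current-dir segments
        }
        if (segment == ".." && !parts.empty()) {
            parts.pop_back();            // step up one directory
        } else {
            parts.push_back(segment);
        }
    }
    std::string result;
    for (std::size_t i = 0; i < parts.size(); ++i) {
        if (i > 0) result += '/';
        result += parts[i];
    }
    return result;
}
```

With the bases described above, a to value of "../libs/armeabi" resolves to proj.android-studio/app/libs/armeabi, which is why a path that starts with ".." can be used to drop files into the libs directory rather than into assets.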

That takes care of copying libopencv_java.so. Next comes libVuforia.so.

For libVuforia.so, I modify jni/Android.mk so that the library is used at build time:

include $(CLEAR_VARS)

LOCAL_MODULE := Vuforia-prebuilt

LOCAL_SRC_FILES := thirdpartyLibs/libVuforia.so

#LOCAL_C_INCLUDES := E:/Libraries/vuforia-sdk-android-5-5-9/build/include

LOCAL_EXPORT_C_INCLUDES := E:/Libraries/vuforia-sdk-android-5-5-9/build/include

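include $(PREBUILT_SHARED_LIBRARY)

To keep the project self-contained, I put both libraries under jni/thirdpartyLibs/. The two libraries are handled differently partly because handling libopencv_java.so the same way as libVuforia.so severely hurts the frame rate. I have not yet looked into why, so I simply copy it as a resource file. Vuforia's .so, on the other hand, is needed at build time; without it the undefined reference error remains.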

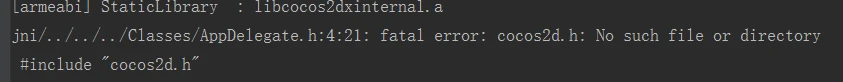

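Does that make it work? Not so fast. After adding the Vuforia module above to jni/Android.mk, the error shown in the figure below appeared instead.

It turned out that when adding the prebuilt module,

LOCAL_STATIC_LIBRARIES += Vuforia-prebuilt

had been mistakenly written as LOCAL_STATIC_LIBRARIES := Vuforia-prebuilt. With :=, the assignment replaces the libraries already listed instead of appending to them.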

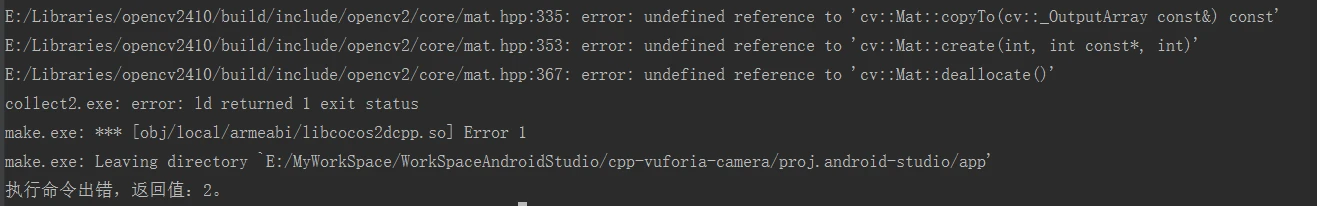

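After that fix, OpenCV errors appeared.

The cause is that OpenCV.mk had not been included in the Android.mk that compiles javaactivity-android.cpp, i.e. the one in ${Project_Root}/cocos2d/cocos/platform/android/. In that Android.mk, add the following after LOCAL_PATH := $(call my-dir):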

include $(CLEAR_VARS)

OPENCV_INSTALL_MODULES:=on

OPENCV_CAMERA_MODULES:=off

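OPENCV_LIB_TYPE:=STATIC

# The path below is the makefile shipped with the official OpenCV for Android SDK

include E:/Libraries/OpenCV-2.4.10-android-sdk/sdk/native/jni/OpenCV.mk

The build should now be free of errors. This is a good point to check whether the camera image is actually arriving as a Mat.

In javaactivity-android.cpp, channel3Mat is the output Mat in RGB format, so it is enough to check whether that Mat's data is empty.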

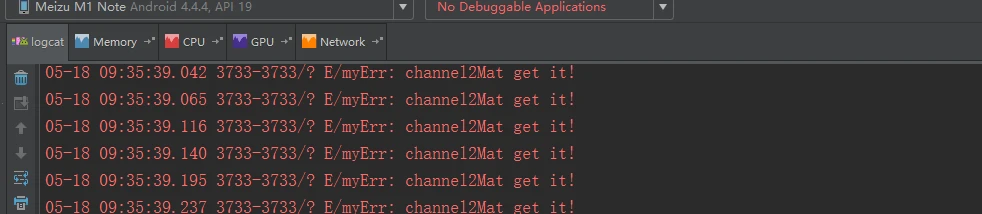

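In my code, this only requires commenting out lines 125~128 of javaactivity-android.cpp and then looking at the log output.

As shown in the figure below, the log prints "channel2Mat get it!".

At this point Vuforia is delivering images to the native layer, but they have not reached HelloWorld.cpp yet. This step touches more files, but each change is small: just one or two function parameters.

The files involved are: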

under $(Project_Root)/cocos2d/cocos/platform/android/:

javaactivity-android.cpp,

CCApplication-android.h,

CCApplication-android.cpp,

under $(Project_Root)/cocos2d/cocos/platform/: CCApplicationProtocol.h,

and under $(Project_Root)/Classes/:

AppDelegate.h,

AppDelegate.cpp,

HelloWorldScene.h,

HelloWorld.cpp,

eight files in total.

- javaactivity-android.cpp 中将nativeInit方法里的run()修改为

run(&grayMat, &channel3Mat, &mutex)

- CCApplication-android.h 中修改run()为

run(cv::Mat*, cv::Mat*, pthread_mutex_t*)

- CCApplication-android.cpp 中修改run()以及run方法体里的applicationDidFinishLaunching()分别为

run(cv::Mat* gray, cv::Mat* mat, pthread_mutex_t* mutex)

applicationDidFinishLaunching(gray, mat, mutex)

- CCApplicationProtocol.h 中修改applicationDidFinishLaunching() = 0 为

applicationDidFinishLaunching(cv::Mat*, cv::Mat*, pthread_mutex_t*) = 0

- CCApplicationProtocol.h 中添加

#include <opencv2/opencv.hpp>

- AppDelegate.h中修改applicationDidFinishLaunching()为

applicationDidFinishLaunching(cv::Mat*, cv::Mat*, pthread_mutex_t*)

- AppDelegate.cpp中修改applicationDidFinishLaunching()以及createScene()分别为

applicationDidFinishLaunching(cv::Mat* gray, cv::Mat* mat, pthread_mutex_t* mutex), createScene(gray, mat, mutex)

- HelloWorld.h中修改createScene()为

createScene(cv::Mat*, cv::Mat*, pthread_mutex_t*)

- HelloWorld.cpp中修改createScene()为

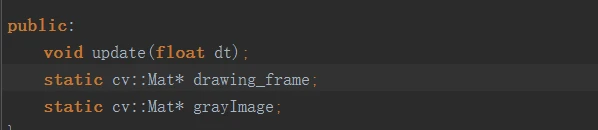

createScene(cv::Mat* gray, cv::Mat* channel3Mat, pthread_mutex_t* mutex)到此,在Vuforia native层get到的相机捕获图片已经传到了我们熟悉的HelloWorld.cpp中了,形参channel3Mat即为RGB888模式的图片,gray为灰度图,mutex是为防止Vuforia update写Mat数据和Cocos2dx读Mat数据而加的锁,可以在HelloWorld.cpp中输出Log信息测试一下, 在HelloWorld.h中,HelloWorld类下重载update方法,添加

void update(float dt);如下图所示, 需要添加两个静态Mat指针, 用来接收传过来的指向存有Mat图像数据的指针

然后在HelloWorld.cpp里添加下面代码,对变量初始化

pthread_mutex_t* mutex1 = NULL;

cv::Mat* HelloWorld::drawing_frame = NULL;

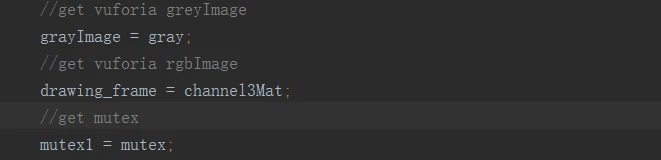

cv::Mat* HelloWorld::grayImage = NULL;然后开始在createScene方法体里添加下面代码,以进行接收传来的图片数据

grayImage = gray;

drawing_frame = channel3Mat;

mutex1 = mutex;如下图所示

在update方法体里对获得的数据进行测试,判断是否有得到图片数据
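The locking pattern behind that check can be sketched without OpenCV or Cocos2dx. In the sketch below, Frame is a hypothetical stand-in for cv::Mat, and publishFrame and snapshotFrame are illustrative names of mine for what the Vuforia update callback and HelloWorld::update would each do. It is a simplified model under those assumptions, not the code from my repository.

```cpp
#include <cassert>
#include <pthread.h>
#include <vector>

// Hypothetical stand-in for cv::Mat so the sketch compiles without OpenCV.
struct Frame {
    int width = 0;
    int height = 0;
    std::vector<unsigned char> pixels;  // tightly packed RGB888
    bool empty() const { return pixels.empty(); }
};

// Writer side (the role of the Vuforia update callback): replace the shared
// frame while holding the lock.
void publishFrame(Frame* shared, pthread_mutex_t* mutex, const Frame& latest) {
    pthread_mutex_lock(mutex);
    *shared = latest;  // deep copy of the pixel buffer
    pthread_mutex_unlock(mutex);
}

// Reader side (the role of HelloWorld::update): copy the frame out under the
// lock, then work on the private copy without blocking the writer.
bool snapshotFrame(const Frame* shared, pthread_mutex_t* mutex, Frame* out) {
    if (shared == nullptr || mutex == nullptr) {
        return false;  // pointers not handed over yet
    }
    pthread_mutex_lock(mutex);
    *out = *shared;
    pthread_mutex_unlock(mutex);
    return !out->empty();
}
```

Keeping the locked region down to a single copy means the render thread never holds the mutex while it processes or draws the image, which limits how long the camera callback can be stalled.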

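Source code for this example is available for download.

Capturing camera frames with the OpenCV VideoCapture class

Note: to repeat, this method only works on versions before Android 5.0, so it does not work on current devices running newer Android versions.

As before, create a new project, import it into AS and build it once; it shows the same HelloWorld screen.

The files to modify are:

HelloWorld.h

HelloWorld.cpp

$(Project_Root)/proj.android-studio/app/AndroidManifest.xml

$(Project_Root)/proj.android-studio/app/jni/Android.mk

$(Project_Root)/proj.android-studio/build-cfg.json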

- In HelloWorld.h, add the following to the class members

// Add these includes after #include "cocos2d.h", before the code below:

//#include <opencv2/core/core.hpp>

//#include <opencv2/opencv.hpp>

public:

cocos2d::Sprite* videoFrame;

protected:

virtual void update(float delta);

private:

cv::Size calc_optimal_camera_resolution(const char* supported, int width, int height);

cv::Ptr<cv::VideoCapture> capture;

- In HelloWorld.cpp, first open the camera in init() and work out the resolution at which frames can be captured

this->capture = new cv::VideoCapture(1);

union {double prop; const char* name;} u;

u.prop = capture->get(CV_CAP_PROP_SUPPORTED_PREVIEW_SIZES_STRING);

int view_width = visibleSize.width;

int view_height = visibleSize.height;

cv::Size camera_resolution;

if (u.name)

camera_resolution = calc_optimal_camera_resolution(u.name, visibleSize.width, visibleSize.height);//cv::Size(720,480);

else

{

LOGE("Cannot get supported camera camera_resolutions");

camera_resolution = cv::Size(visibleSize.width,

visibleSize.height);

}

if ((camera_resolution.width != 0) && (camera_resolution.height != 0))

{

this->capture->set(CV_CAP_PROP_FRAME_WIDTH, camera_resolution.width);

this->capture->set(CV_CAP_PROP_FRAME_HEIGHT, camera_resolution.height);

}

// HelloWorld2.png is 1024x768; add an image of your own to the Resources directory

videoFrame = Sprite::create("HelloWorld2.png");

// position the sprite on the center of the screen

videoFrame->setPosition(Vec2(visibleSize.width/2 + origin.x, visibleSize.height/2 + origin.y));

// add the sprite as a child to this layer

this->addChild(videoFrame, 3);

// Implementation of calc_optimal_camera_resolution

cv::Size HelloWorld::calc_optimal_camera_resolution(const char* supported, int width, int height)

{

int frame_width = 0;

int frame_height = 0;

size_t prev_idx = 0;

size_t idx = 0;

float min_diff = FLT_MAX;

do

{

int tmp_width;

int tmp_height;

prev_idx = idx;

while ((supported[idx] != '\0') && (supported[idx] != ','))

idx++;

if (sscanf(&supported[prev_idx], "%dx%d", &tmp_width, &tmp_height) != 2) { idx++; continue; } // skip malformed entries

int w_diff = width - tmp_width;

int h_diff = height - tmp_height;

if ((h_diff >= 0) && (w_diff >= 0))

{

if ((h_diff <= min_diff) && (tmp_height <= 720))

{

frame_width = tmp_width;

frame_height = tmp_height;

min_diff = h_diff;

}

}

idx++; // to skip comma symbol

} while (supported[idx - 1] != '\0');

return cv::Size(frame_width, frame_height);

}

Next, test whether frames captured by the camera are actually arriving:

// test whether VideoCapture has grabbed a frame

void HelloWorld::update(float dt)

{

cv::Mat drawing_frame;

if (!this->capture.empty())

{

if (this->capture->grab())

this->capture->retrieve(drawing_frame, CV_CAP_ANDROID_COLOR_FRAME_RGB);

if( drawing_frame.empty())

LOGE("KONGKONGKONGKONG");

else

LOGE("HAHHAHA");

}

}
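The selection rule in calc_optimal_camera_resolution can also be tried out without a camera. The sketch below restates that rule using only the standard library: among the supported sizes that fit inside the view and are at most 720 pixels tall, take the one closest to the view height. Resolution and pickResolution are hypothetical names of mine, and the size list format ("WxH,WxH,...") is assumed from the parsing code above.

```cpp
#include <cassert>
#include <cstdio>
#include <string>

struct Resolution {
    int width;
    int height;
};

// Picks, from a list such as "1280x720,640x480,320x240", the tallest entry that
// fits inside the view and is at most maxHeight tall. Malformed entries are
// skipped. Returns {0, 0} if nothing fits.
Resolution pickResolution(const std::string& supported, int viewWidth, int viewHeight,
                          int maxHeight = 720) {
    Resolution best{0, 0};
    std::size_t start = 0;
    while (start <= supported.size()) {
        std::size_t end = supported.find(',', start);
        if (end == std::string::npos) {
            end = supported.size();
        }
        const std::string entry = supported.substr(start, end - start);
        int w = 0;
        int h = 0;
        if (std::sscanf(entry.c_str(), "%dx%d", &w, &h) == 2 &&
            w <= viewWidth && h <= viewHeight && h <= maxHeight && h >= best.height) {
            best = Resolution{w, h};  // closest to the view height so far
        }
        start = end + 1;  // move past the comma
    }
    return best;
}
```

A zero-sized result corresponds to the case where the original code skips setting CV_CAP_PROP_FRAME_WIDTH and CV_CAP_PROP_FRAME_HEIGHT.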

- In AndroidManifest.xml, add the camera permissions

<uses-permission android:name="android.permission.WRITE_EXTERNAL_STORAGE" />

<uses-permission android:name="android.permission.CAMERA"/>

<uses-feature android:name="android.hardware.camera" android:required="false"/>

<uses-feature android:name="android.hardware.camera.autofocus" android:required="false"/>

<uses-feature android:name="android.hardware.camera.front" android:required="false"/>

<uses-feature android:name="android.hardware.camera.front.autofocus" android:required="false"/>

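- In Android.mk, add OpenCV.mk near the line LOCAL_MODULE := cocos2dcpp_shared

#OPENCV_CAMERA_MODULES :=off

OPENCV_INSTALL_MODULES :=off

OPENCV_LIB_TYPE := SHARED

include E:/Libraries/OpenCV-2.4.10-android-sdk/sdk/native/jni/OpenCV.mk

Below the line LOCAL_C_INCLUDES := $(LOCAL_PATH)/../../../Classes, add

LOCAL_C_INCLUDES += E:/Libraries/opencv2410/build/include

Does it feel like we forgot to add OpenCV's .so files?

The all-important implementation of the OpenCV camera module lives here, as shown in the figure below.

How do we get the files in this directory into the libs directory? And why copy them? I tested adding

OPENCV_CAMERA_MODULES :=on

OPENCV_INSTALL_MODULES :=on

to Android.mk, which installs some of the OpenCV libraries into the libs directory, but this severely hurts the frame rate. Perhaps it is because the library files get packaged into the apk; if anyone knows, I would be glad to hear.

- In build-cfg.json, modify copy_resources, almost exactly as in the Vuforia section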

"copy_resources": [

{

"from": "../Resources",

"to": ""

},

{

"from": "app/jni/thirdpartyLibs",

"to": "../libs/armeabi"

}

]

The final update method looks like this:

void HelloWorld::update(float dt)

{

cv::Mat drawing_frame;

if (!this->capture.empty())

{

if (this->capture->grab())

this->capture->retrieve(drawing_frame, CV_CAP_ANDROID_COLOR_FRAME_RGB); // RGB color mode

if( drawing_frame.empty())

LOGE("KONGKONGKONGKONG");

else

{

//LOGE("HAHHAHA");

//show image and check for user input

auto texture = new Texture2D;

texture->initWithData(drawing_frame.data,

drawing_frame.elemSize() * drawing_frame.cols * drawing_frame.rows,

Texture2D::PixelFormat::RGB888,

drawing_frame.cols,

drawing_frame.rows,

Size(drawing_frame.cols, drawing_frame.rows));

this->videoFrame->setTexture(texture);

texture->autorelease();

}

}

}
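The update method above hands drawing_frame.data to initWithData with a length of elemSize * cols * rows, which matches the buffer only when the image rows are stored back to back. I have not seen VideoCapture return anything else, so treat the following as a precaution rather than a required step: if a frame ever had padded rows, it could be repacked first. packRows is a hypothetical helper of mine and uses plain pointers instead of cv::Mat so that it stands alone.

```cpp
#include <cassert>
#include <cstddef>
#include <cstring>
#include <vector>

// Hypothetical helper: copies an image whose rows start `stepBytes` apart into
// a tightly packed buffer of width * height * channels bytes.
std::vector<unsigned char> packRows(const unsigned char* data, int width, int height,
                                    int channels, std::size_t stepBytes) {
    const std::size_t rowBytes = static_cast<std::size_t>(width) * channels;
    std::vector<unsigned char> packed(rowBytes * height);
    for (int y = 0; y < height; ++y) {
        // Copy only the meaningful bytes of each row, dropping any padding.
        std::memcpy(packed.data() + y * rowBytes, data + y * stepBytes, rowBytes);
    }
    return packed;
}
```

For a cv::Mat, the corresponding inputs would be its data pointer, cols, rows, channel count and row step; the packed buffer's size then equals the length passed to initWithData.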